This PR avoids loading `pullrequest.Issue` twice on the pull request list

page. It reduces the database queries for those WIP pull requests by x

times.

Partially fix #29585

---------

Co-authored-by: Giteabot <teabot@gitea.io>

When reading the code `pager.AddParam(ctx, "search", "search")`, the

question always comes up: What is it doing? Where does the value come

from? Why "search" / "search"?

Now it is clear: `pager.AddParamIfExist("search", ctx.Data["search"])`
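
To illustrate the difference, here is a minimal sketch of an "add if it exists" pagination helper. The `Pager` type, its fields and the `Query` method are hypothetical stand-ins written for this example, not Gitea's actual implementation; only the method name `AddParamIfExist` comes from the message above.

```go
package main

import (
	"fmt"
	"net/url"
)

// Pager is a hypothetical, stripped-down pagination helper (not Gitea's type).
type Pager struct {
	params url.Values
}

// NewPager returns an empty Pager.
func NewPager() *Pager { return &Pager{params: url.Values{}} }

// AddParamIfExist adds key=val to the page links only when the caller
// actually supplied a value; a nil value means "not set" and is skipped.
func (p *Pager) AddParamIfExist(key string, val any) {
	if val == nil {
		return
	}
	p.params.Set(key, fmt.Sprint(val))
}

// Query returns the encoded query string used for the page links.
func (p *Pager) Query() string { return p.params.Encode() }

func main() {
	data := map[string]any{"search": "bug fix"} // stands in for ctx.Data
	p := NewPager()
	p.AddParamIfExist("search", data["search"])
	p.AddParamIfExist("state", data["state"]) // absent, so it is skipped
	fmt.Println(p.Query())
}
```

The call site now names both the query key and the source of the value, so nothing has to be looked up implicitly.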

Just some refactoring bits towards replacing **util.OptionalBool** with

**optional.Option[bool]**
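
As a rough sketch of why a generic optional can replace a dedicated tri-state bool, the example below models "not set", "true" and "false" with one small generic type. The struct layout and the `Some`, `None`, `Has` and `ValueOrDefault` names are assumptions made for this illustration and may differ from the real `optional` package.

```go
package main

import "fmt"

// Option is a hypothetical generic optional value used only in this sketch.
type Option[T any] struct {
	value T
	set   bool
}

// Some wraps a value that is present.
func Some[T any](v T) Option[T] { return Option[T]{value: v, set: true} }

// None returns an Option with no value.
func None[T any]() Option[T] { return Option[T]{} }

// Has reports whether a value is present.
func (o Option[T]) Has() bool { return o.set }

// ValueOrDefault returns the stored value, or def when nothing is set.
func (o Option[T]) ValueOrDefault(def T) T {
	if o.set {
		return o.value
	}
	return def
}

// describe shows the three states a boolean filter can be in.
func describe(isClosed Option[bool]) string {
	if !isClosed.Has() {
		return "any state"
	}
	if isClosed.ValueOrDefault(false) {
		return "closed only"
	}
	return "open only"
}

func main() {
	fmt.Println(describe(None[bool]()))
	fmt.Println(describe(Some(true)))
	fmt.Println(describe(Some(false)))
}
```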

---------

Co-authored-by: KN4CK3R <admin@oldschoolhack.me>

Since `modules/context` has to depend on `models` and many other

packages, it should be moved from `modules/context` to

`services/context` according to design principles. This PR contains no

logic changes; it only moves packages.

- Move `code.gitea.io/gitea/modules/context` to

`code.gitea.io/gitea/services/context`

- Move `code.gitea.io/gitea/modules/contexttest` to

`code.gitea.io/gitea/services/contexttest` because it depends on

context

- Move `code.gitea.io/gitea/modules/upload` to

`code.gitea.io/gitea/services/context/upload` because it depends on

context

Fix #24662.

Replace #24822 and #25708 (although it has been merged)

## Background

In the past, Gitea supported issue searching with a keyword and

conditions in a less efficient way. It worked by searching for issues

with the keyword and obtaining limited IDs (as it is heavy to get all)

on the indexer (bleve/elasticsearch/meilisearch), and then querying with

conditions on the database to find a subset of the found IDs. This is

why the results could be incomplete.

To solve this issue, we need to store all fields that could be used as

conditions in the indexer and support both keyword and additional

conditions when searching with the indexer.

## Major changes

- Redefine `IndexerData` to include all fields that could be used as

filter conditions.

- Refactor `Search(ctx context.Context, kw string, repoIDs []int64,

limit, start int, state string)` to `Search(ctx context.Context, options

*SearchOptions)`, so it supports more conditions now.

- Change the data type stored in `issueIndexerQueue`. Use

`IndexerMetadata` instead of `IndexerData` in case the data has been

updated while it is in the queue. This also reduces the storage size of

the queue.

- Enhance searching with Bleve/Elasticsearch/Meilisearch, making them

fully support `SearchOptions`. Also, update the data versions.

- Keep most of the database indexer's logic, but remove

`issues.SearchIssueIDsByKeyword` in `models` to avoid confusion about where

the entry point for searching issues is.

- Start a Meilisearch instance to test it in unit tests.

- Add unit tests with almost full coverage to test

Bleve/Elasticsearch/Meilisearch indexer.
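
The idea behind the `Search(ctx, options)` refactoring can be sketched as follows: keyword and conditions are evaluated together against the indexed fields, and paging is applied only afterwards, so no match is lost to an early ID limit. This is a simplified in-memory illustration; the `Issue` type and the fields of `SearchOptions` shown here are assumptions, not the real definitions.

```go
package main

import (
	"fmt"
	"strings"
)

// Issue is a hypothetical indexed document holding the filterable fields.
type Issue struct {
	ID, RepoID int64
	Title      string
	IsClosed   bool
}

// SearchOptions bundles keyword and conditions (field names are assumptions).
type SearchOptions struct {
	Keyword  string
	RepoIDs  []int64
	IsClosed *bool // nil means "any state"
	Skip     int
	Limit    int // 0 means "no limit"
}

func containsID(ids []int64, id int64) bool {
	for _, v := range ids {
		if v == id {
			return true
		}
	}
	return false
}

// Search applies every condition first and pages the result afterwards.
// It returns the IDs on the requested page and the total number of matches.
func Search(index []Issue, opts *SearchOptions) ([]int64, int) {
	var matched []int64
	kw := strings.ToLower(opts.Keyword)
	for _, is := range index {
		if kw != "" && !strings.Contains(strings.ToLower(is.Title), kw) {
			continue
		}
		if len(opts.RepoIDs) > 0 && !containsID(opts.RepoIDs, is.RepoID) {
			continue
		}
		if opts.IsClosed != nil && *opts.IsClosed != is.IsClosed {
			continue
		}
		matched = append(matched, is.ID)
	}
	total := len(matched)
	start := opts.Skip
	if start > total {
		start = total
	}
	end := total
	if opts.Limit > 0 && start+opts.Limit < total {
		end = start + opts.Limit
	}
	return matched[start:end], total
}

func main() {
	index := []Issue{
		{1, 1, "Crash on login", false},
		{2, 1, "Crash on logout", true},
		{3, 2, "crash in API", false},
		{4, 1, "Typo in docs", false},
	}
	open := false
	ids, total := Search(index, &SearchOptions{Keyword: "crash", RepoIDs: []int64{1}, IsClosed: &open})
	fmt.Println(ids, total)
}
```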

---------

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Fix #25627

1. `ctx.Data["Link"]` should use a relative URL, not AppURL.

2. The `data-params` is incorrect because it doesn't contain "page". JS

can simply use "window.location.search" to construct the AJAX URL.

3. The `data-xxx` and `id` in notification_subscriptions.tmpl were

copied and pasted; they have no effect.

The `q` parameter was not rendered in pagination links because

`context.Pagination:AddParam` checks for existence of the parameter in

`ctx.Data` where it was absent. Added the parameter there to fix it.

- Merge the files `compare.go` and `slice.go` into `slice.go`.

- Fix `ExistsInSlice`, it's buggy:

  - It uses `sort.Search`, so it assumes that the input slice is sorted.

  - It passes `func(i int) bool { return slice[i] == target }` to

    `sort.Search`, which is incorrect; check the doc of `sort.Search`.

- Combine `IsInt64InSlice(int64, []int64)` and `ExistsInSlice(string,

[]string)` into `SliceContains[T]([]T, T)`.

- Combine `IsSliceInt64Eq([]int64, []int64)` and `IsEqualSlice([]string,

[]string)` into `SliceSortedEqual[T]([]T, []T)`.

- Add `SliceEqual[T]([]T, []T)` as a distinction from

`SliceSortedEqual[T]([]T, []T)`.

- Redesign `RemoveIDFromList([]int64, int64) ([]int64, bool)` to

`SliceRemoveAll[T]([]T, T) []T`.

- Add `SliceContainsFunc[T]([]T, func(T) bool)` and

`SliceRemoveAllFunc[T]([]T, func(T) bool)` for general use.

- Add comments to explain why `golang.org/x/exp/slices` is not used.

- Add unit tests.
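
The `sort.Search` problem described in the list above can be reproduced with a short sketch. `existsInSliceBuggy` is a reconstruction based only on the description in this message (the original body is assumed), and `SliceContains` is a plain linear-scan version with the signature named above. `sort.Search` requires a predicate that is false for a prefix of the indexes and true for the rest; an equality predicate does not satisfy that, so the binary search can miss an element even in a sorted slice.

```go
package main

import (
	"fmt"
	"sort"
)

// existsInSliceBuggy is an assumed reconstruction of the flawed approach:
// the equality predicate is not monotonic, which sort.Search requires.
func existsInSliceBuggy(target string, slice []string) bool {
	i := sort.Search(len(slice), func(i int) bool { return slice[i] == target })
	return i < len(slice)
}

// SliceContains reports whether target is in s, using a linear scan that
// needs neither a sorted input nor a monotonic predicate.
func SliceContains[T comparable](s []T, target T) bool {
	for _, v := range s {
		if v == target {
			return true
		}
	}
	return false
}

func main() {
	s := []string{"a", "b", "c"} // sorted, and "a" is present
	fmt.Println(existsInSliceBuggy("a", s)) // false: the search skips index 0
	fmt.Println(SliceContains(s, "a"))      // true
}
```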

Change all license headers to comply with REUSE specification.

Fix #16132

Co-authored-by: flynnnnnnnnnn <flynnnnnnnnnn@github>

Co-authored-by: John Olheiser <john.olheiser@gmail.com>

This PR adds a context parameter to a bunch of methods. Some helper

`xxxCtx()` methods got replaced with the normal name now.

Co-authored-by: delvh <dev.lh@web.de>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

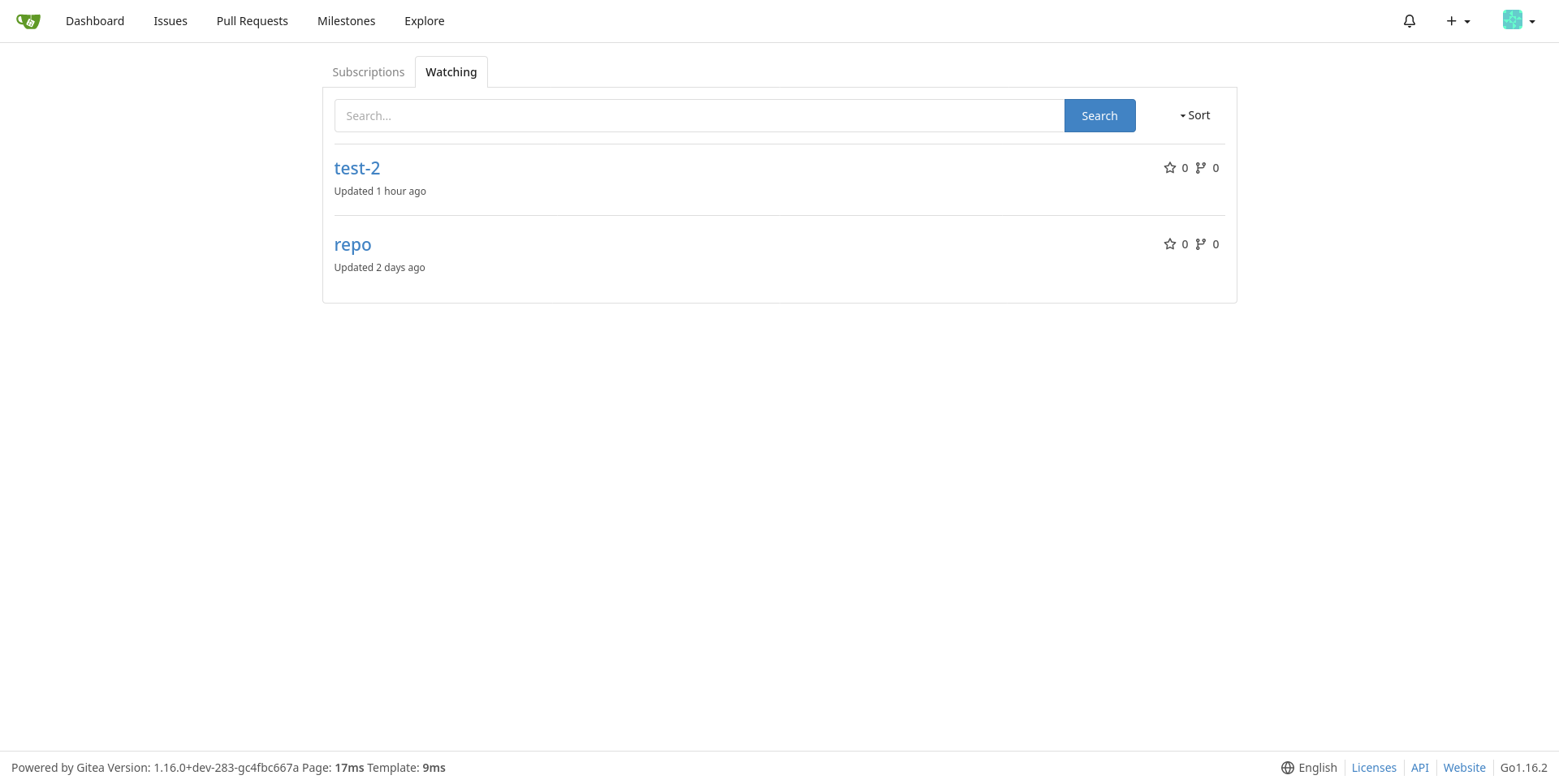

Adds GitHub-like pages to view watched repos and subscribed issues/PRs

This is my second try to fix this, but it is better than the first since

it doesn't use a filter option which could be slow when accessing

`/issues` or `/pulls` and it shows both pulls and issues (the first try

is #17053).

Closes #16111

Replaces and closes #17053

Co-authored-by: Lauris BH <lauris@nix.lv>

Co-authored-by: 6543 <6543@obermui.de>

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

If the context is cancelled, `.NotificationUnreadCount` in a template can

cause an infinite loop with `ctx.ServerError()` being called, which

creates a template that then calls `.NotificationUnreadCount` calling

`GetNotificationCount()` with the cancelled context resulting in an

error that calls `ctx.ServerError`... and so on...

This PR simply stops calling `ctx.ServerError` in the error handler code

for `.NotificationUnreadCount` as we have already started rendering and

so it is too late to call `ctx.ServerError`. Additionally we skip

logging the error if it's a context cancelled error.

Fix #19793

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: techknowlogick <techknowlogick@gitea.io>

Reusing `/api/v1` from Gitea UI pages has pros and cons.

Pros:

1) Less code duplication

Cons:

1) API/v1 has to support sessions shared with page requests.

2) You need to consider both whenever you want to change something in API/v1 or the pages.

This PR moves all dependencies on API/v1 out of the UI pages.

Partially replace #16052

There are multiple places where Gitea does not properly escape URLs that it is building, and there are multiple places where it builds URLs by hand when there is already a simpler function available to do this.

This is an extensive PR attempting to fix these issues.

1. The first commit in this PR looks through all href, src and links in the Gitea codebase and has attempted to catch all the places where there is potentially incomplete escaping.

2. Whilst doing this we will prefer to use functions that create URLs over recreating them by hand.

3. All uses of strings should be directly escaped - even if they are not currently expected to contain escaping characters. The main benefit to doing this will be that we can consider relaxing the constraints on user names and reponames in future.

4. The next commit looks at escaping in the wiki and re-considers the urls that are used there. Using the improved escaping here, wiki files containing '/' can be handled. (This implementation will currently still place all of the wiki files in the root directory of the repo, but this would not be difficult to change.)

5. The title generation in feeds is now properly escaped.

6. EscapePound is no longer needed - urls should be PathEscaped / QueryEscaped as necessary but then re-escaped with Escape when creating html with locales.

Signed-off-by: Andrew Thornton <art27@cantab.net>

* Apache `ProxyPassReverse` only works for Location, Content-Location and URI headers on HTTP redirect responses; it causes more problems than it resolves. Now all URLs generated by Gitea have the correct prefix AppSubURL, so we do not need to set `ProxyPassReverse`.

* fix url param

* use AppSubURL instead of AppURL in api/v1

Co-authored-by: techknowlogick <techknowlogick@gitea.io>

Follow-up from #16562; prepares for #16567

* Rename ctx.Form() to ctx.FormString()

* Reimplement FormX funcs to need less code and CPU cycles

* Move code into own file

When marking notifications read, the results may be returned out of order

or be delayed. This PR sends a sequence number to Gitea so that the

browser can ensure that only the results of the latest notification

change are shown.
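
The sequence-number idea can be sketched independently of the browser code. In this illustration (written in Go for consistency with the rest of the codebase, although the real check runs in the browser), each request gets an increasing number, the server is assumed to echo it back, and a response is applied only if it belongs to the most recent request. The `Result` and `latestOnly` types are hypothetical.

```go
package main

import "fmt"

// Result is a hypothetical response that echoes the request's sequence number.
type Result struct {
	Sequence int
	Body     string
}

// latestOnly tracks the most recently issued sequence number and the
// content currently shown.
type latestOnly struct {
	latest int
	shown  string
}

// NewRequest reserves the next sequence number for an outgoing request.
func (l *latestOnly) NewRequest() int {
	l.latest++
	return l.latest
}

// Apply shows the result only if it answers the latest request;
// stale or out-of-order responses are dropped.
func (l *latestOnly) Apply(r Result) bool {
	if r.Sequence != l.latest {
		return false
	}
	l.shown = r.Body
	return true
}

func main() {
	var view latestOnly
	first := view.NewRequest()
	second := view.NewRequest()

	// Responses arrive out of order: the newer one first.
	fmt.Println(view.Apply(Result{second, "state after second click"}))
	fmt.Println(view.Apply(Result{first, "state after first click"}))
	fmt.Println(view.shown)
}
```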

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: 6543 <6543@obermui.de>

* refactor routers directory

* move func used for web and api to common

* make corsHandler a function to prohibit side effects

* rm unused func

Co-authored-by: 6543 <6543@obermui.de>

* Use AJAX for notifications table

Signed-off-by: Andrew Thornton <art27@cantab.net>

* move to separate js

Signed-off-by: Andrew Thornton <art27@cantab.net>

* placate golangci-lint

Signed-off-by: Andrew Thornton <art27@cantab.net>

* Add autoupdating notification count

Signed-off-by: Andrew Thornton <art27@cantab.net>

* Fix wipeall

Signed-off-by: Andrew Thornton <art27@cantab.net>

* placate tests

Signed-off-by: Andrew Thornton <art27@cantab.net>

* Try hidden

Signed-off-by: Andrew Thornton <art27@cantab.net>

* Try hide and hidden

Signed-off-by: Andrew Thornton <art27@cantab.net>

* More auto-update improvements

Only run checker on pages that have a count

Change starting checker to 10s with a back-off to 60s if there is no change

Signed-off-by: Andrew Thornton <art27@cantab.net>

* string comparison!

Signed-off-by: Andrew Thornton <art27@cantab.net>

* as per @silverwind

Signed-off-by: Andrew Thornton <art27@cantab.net>

* add configurability as per @6543

Signed-off-by: Andrew Thornton <art27@cantab.net>

* Add documentation as per @6543

Signed-off-by: Andrew Thornton <art27@cantab.net>

* Use CSRF header not query

Signed-off-by: Andrew Thornton <art27@cantab.net>

* Further JS improvements

Fix @etzelia update notification table request

Fix @silverwind comments

Co-Authored-By: silverwind <me@silverwind.io>

Signed-off-by: Andrew Thornton <art27@cantab.net>

* Simplify the notification count fns

Signed-off-by: Andrew Thornton <art27@cantab.net>

Co-authored-by: silverwind <me@silverwind.io>

Unfortunately there appears to be a potential race with notifications

being set before the associated issue has been committed.

This PR adds protection to the notifications list to log any failures

and remove these notifications from the display.

References #10815 - and prevents the panic but does not completely fix

this.

Signed-off-by: Andrew Thornton <art27@cantab.net>

* Use relative URLs

* Notifications - Mark as read/unread

* Feature of pinning a notification

* On view issue, do not mark as read a pinned notification